We’ve left a decade marked by technology churn, change, and surprises—nowhere more so than in cybersecurity.

What happened and what have we learned?

Before 2010, hacks were generally driven by personal or financial gain. That year, the discovery of the Stuxnet worm changed everything. Widely attributed to US and Israeli intelligence services, Stuxnet targeted Supervisory Control and Data Acquisition (SCADA) systems, which monitor and control industrial processes like those in water and power grids. The malware sabotaged centrifuges supporting Iran's nuclear program, physically destroying equipment.

While nation-on-nation cyberattacks began before 2010, Stuxnet brought the power of digital weapons into full view. Nation-states have resources far beyond the reach of independent hacker groups, and the right malicious code could not only steal money and personal data but also destroy physical infrastructure. In 2015, Russia advanced this paradigm with a cyberattack on Ukraine's power grid that cut electricity to hundreds of thousands of people, amid the conflict that followed its 2014 annexation of Crimea. Hacking had become an integral part of warfare.

Such warfare can extend beyond military targets, as we learned in 2014 when hackers linked to North Korea's intelligence services turned their attention to Sony Pictures Entertainment. The objective? Stop the release of The Interview, a comedy that rankled North Korea's despotic ruler, Kim Jong-un. Weeks before the film's scheduled release, hackers attacked the studio's network and leaked internal data and emails online to embarrass and discredit the studio. Two years later, in the most notorious political hack to date, attackers breached the Democratic National Committee and leaked emails and documents aimed at influencing the American presidential election.

Concurrently, the past decade saw traditional attacks grow in sophistication and scale. In 2013, for example, inventive cybercriminals used point-of-sale (POS) malware to steal payment card information from some 40 million Target shoppers. In 2016, a carefully timed hack of Bangladesh's central bank attempted to steal nearly $1 billion from the bank's account at the Federal Reserve Bank of New York. The thieves skillfully abused the trusted SWIFT network, which banks rely on to exchange payment instructions with one another, to send fraudulent transfer requests. A typo, of all things, helped expose the scheme, and most of the transfers were blocked, but some $81 million was still lost.

Authorities suspected North Korea was behind the heist. An official of the National Security Agency noted that if this suspicion is correct, “a nation state is robbing banks.”1 Indeed, some have asserted that North Korea may be funding its weapons programs with the spoils of its state-sponsored hacks.

Even the National Security Agency (NSA) proved vulnerable. Starting in 2016, a hacker group known as the Shadow Brokers began releasing NSA hacking tools, obtained by means that remain unclear, that exploited previously undisclosed vulnerabilities. One of these exploits, EternalBlue, helped the WannaCry ransomware spread worldwide in 2017 and fueled the global ransomware scourge. WannaCry is attributed to North Korea, and Russia has reportedly been behind similar malware, such as NotPetya and Bad Rabbit.

By hacking standards, ransomware attacks are relatively simple, and the tools are available online. They don't require a degree in software engineering, putting them within reach of even average hackers. Raj Samani, chief scientist and fellow at McAfee, said that today, "even an 11-year-old could mount and run a ransomware campaign."2

A Decade of Hacker Innovation

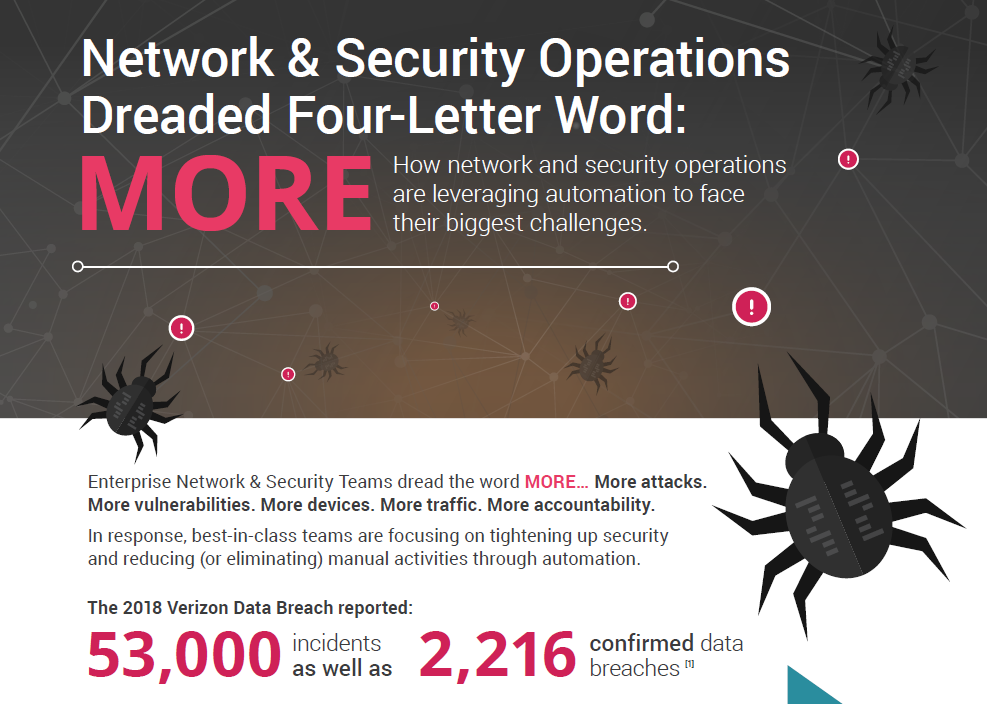

What have we learned from hackers' relentless ingenuity during the 2010s? Breaches will continue, hackers are becoming more advanced, their tools are both improving and proliferating, and the stakes for the rest of us are higher. We're even seeing cybercrime-as-a-service, meaning bad actors can rent the tools and expertise they lack.

So what can we do?

We can start by recognizing that part of the problem is the rapid pace at which network environments change. Enterprises, large and small, public and private, are building containerized applications in the cloud, technologies that offer distinct advantages but also present security issues. DevOps teams, which build these containerized, cloud-based applications, are still too often siloed from security teams. The inherent conflict: DevOps wants to launch applications quickly, while security wants time to review them.

Going forward, application security will require integrating security teams into the DevOps build process, so that vulnerabilities are remediated and risks are well understood by all stakeholders. In the right framework, security and agility will complement each other.

The integration of DevOps and security is essential to safeguard production applications, but it doesn't address vulnerabilities elsewhere in the network, such as firewalls, routers, servers, and even CPUs, as well as expanding but fuzzy network edges and careless users. Nor does it address the fact that networks are more complex and dynamic than ever.

Security teams that simply react to breaches by putting out fires face a truly Sisyphean task. Instead, we must operate more intelligently. A raft of new security companies offers excellent tools, but even the most effective resources won't help without security professionals to implement them. Security information and event management (SIEM) systems only issue alerts; they don't take any action. No enterprise has enough security professionals to examine every alert its SIEM generates 24/7, and the cost of missing the wrong one can be severe. In 2018, even after discovering that hackers had penetrated a key system, Marriott didn't realize for several months that a vast amount of customer data had been stolen. It also learned that hackers had been in the system for four years. This case is hardly unique. In many of the most destructive assaults, bad actors lurked undetected on networks for weeks, months, even years before the attacks.

Security is responsible for establishing compliance benchmarks, but ensuring compliance is challenging when the network is relentlessly churning with changing users, apps, permissions, and connectivity. It's nearly impossible for understaffed security teams to keep daily track of their on-site and cloud infrastructures while also working with DevOps. How many enterprises actually know their state of compliance at any given moment? An organization may pass an audit one day and unwittingly introduce new vulnerabilities the next.

And there's basic housekeeping like applying security patches. In the 2017 Equifax breach, attackers stole the personal data of some 145.5 million people by exploiting a known Apache Struts vulnerability on a server that had not been patched.

To keep pace with the evolving threat landscape, enterprises need to go well beyond manually managing their network security. They need to automate their processes. Automation means knowing that rules, permissions, and patches are consistently correct and up to date. It means visibility across the environment even as it changes. Automatic change tracking, for example, records every change request as it occurs and ensures temporary changes expire on schedule, so stale access doesn't linger as a liability. When network security management is automated, compliance is continuous, understood, and documented year-round.
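To make the idea of automatic change tracking more concrete, here is a minimal sketch, assuming each firewall change can be modeled as a request with an optional expiry time. The `ChangeRequest` and `ChangeTracker` names, the rule strings, and the in-memory storage are all hypothetical illustrations, not any vendor's product or API; a real system would push removals to actual devices and persist its audit trail.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class ChangeRequest:
    """Hypothetical record of one requested network change."""
    request_id: str
    rule: str                            # free-form rule text, illustrative only
    requested_by: str
    created_at: datetime
    expires_at: Optional[datetime] = None  # None means the change is permanent


class ChangeTracker:
    """Hypothetical in-memory tracker: logs every change and retires expired ones."""

    def __init__(self) -> None:
        self.active = {}      # request_id -> ChangeRequest currently in force
        self.audit_log = []   # (timestamp, action, request_id) tuples

    def submit(self, req: ChangeRequest) -> None:
        # Record the change the moment it is requested.
        self.active[req.request_id] = req
        self.audit_log.append((req.created_at, "added", req.request_id))

    def expire_due(self, now: datetime) -> List[str]:
        # Remove every temporary change whose expiry time has passed.
        due = [r for r in self.active.values()
               if r.expires_at is not None and r.expires_at <= now]
        for r in due:
            del self.active[r.request_id]
            self.audit_log.append((now, "expired", r.request_id))
        return [r.request_id for r in due]


t0 = datetime(2020, 1, 1, tzinfo=timezone.utc)
tracker = ChangeTracker()
tracker.submit(ChangeRequest("CR-1", "allow tcp 10.0.0.5 -> 10.1.0.9:443",
                             "alice", t0, t0 + timedelta(days=7)))
tracker.submit(ChangeRequest("CR-2", "allow tcp 10.0.0.0/24 -> 10.2.0.1:22",
                             "bob", t0))
print(tracker.expire_due(t0 + timedelta(days=8)))  # ['CR-1']
```

Running `expire_due` on a schedule is what keeps a week-long exception from quietly becoming permanent, and the audit log doubles as documentation for auditors.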

Hacker persistence and innovation over the last decade indicate that the 2020s could be fraught with peril. But organizations can turn to their own persistence and innovation to defend themselves. They can reduce mistakes and oversights and mitigate risks by ensuring their networks are constantly compliant with security policies and regulations. Minimizing the gap between the current state of compliance and the desired state of compliance is common-sense preparedness, and it can only be achieved through automation.
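One way to picture "the gap between the current state and the desired state" is as a set difference. The sketch below assumes, purely for illustration, that both the approved policy and the live configuration can be reduced to sets of (source, destination, port) rules; the `Rule` type and `compliance_gap` function are hypothetical, and real firewall policies (address ranges, wildcards, rule order) are considerably harder to compare.

```python
from typing import Dict, NamedTuple, Set


class Rule(NamedTuple):
    """Hypothetical, simplified access rule."""
    source: str
    destination: str
    port: int


def compliance_gap(current: Set[Rule], desired: Set[Rule]) -> Dict[str, Set[Rule]]:
    """Compare live rules with approved policy; empty sets mean no drift."""
    return {
        "unapproved": current - desired,  # live rules the policy does not allow
        "missing": desired - current,     # approved rules not actually deployed
    }


desired = {Rule("web", "db", 5432), Rule("lb", "web", 443)}
current = {Rule("web", "db", 5432), Rule("lb", "web", 443), Rule("any", "db", 22)}

gap = compliance_gap(current, desired)
print(sorted(gap["unapproved"]))  # [Rule(source='any', destination='db', port=22)]
print(gap["missing"])             # set()
```

Run continuously rather than once per audit, a check like this flags the rule that slipped in the day after a successful audit instead of months later.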

Download the infographic to learn more about how automation can help address the growing frequency and sophistication of cyberattacks.
